We are very excited to finally enable single-step image generation, which will dramatically reduce compute costs and accelerate the process.

– Fredo Durand, MIT professor of electrical engineering and computer science

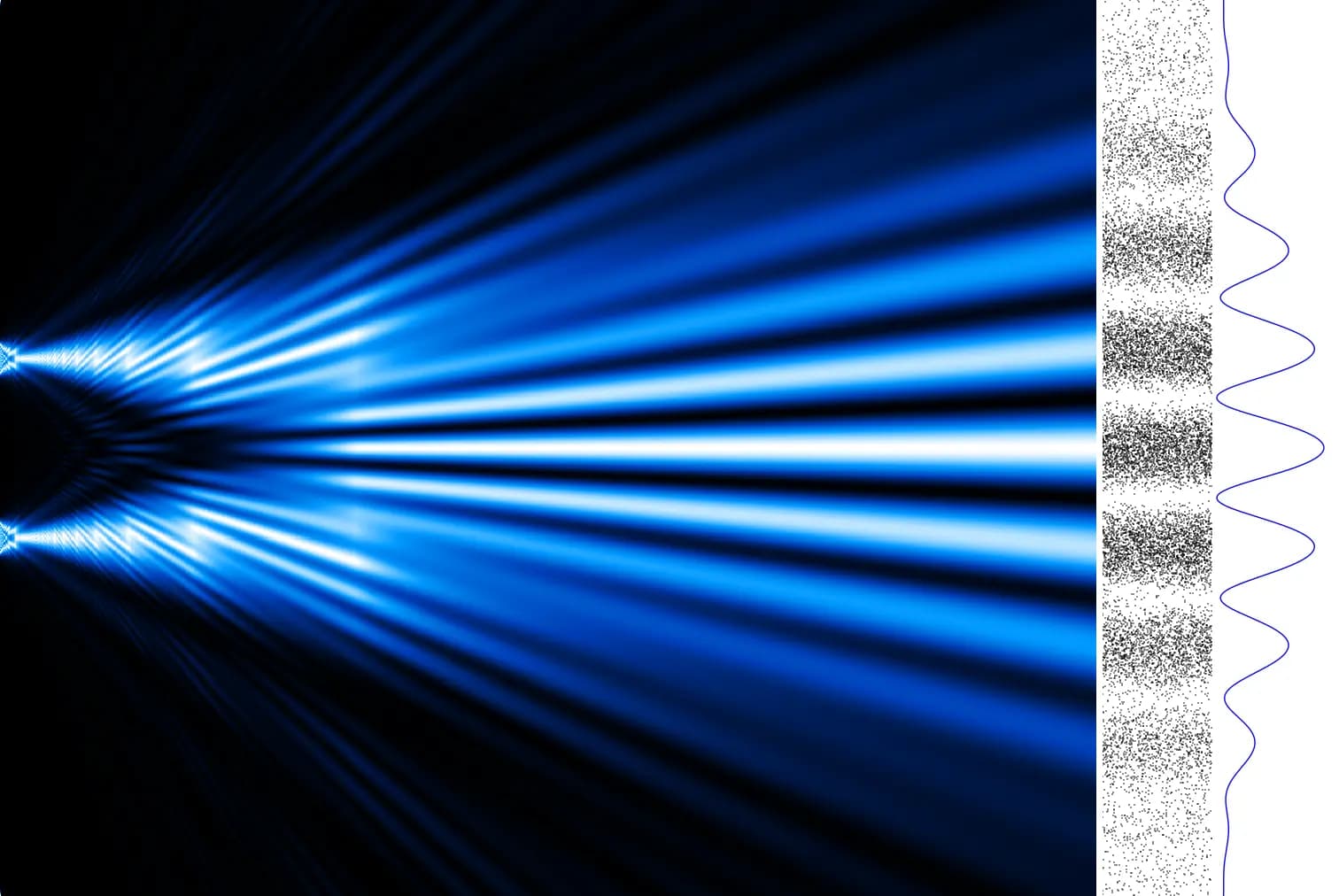

Researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a framework that changes how computers generate images. Diffusion models have traditionally been at the forefront of AI image generation: they iteratively refine a noisy initial state into a clear and coherent image. The process is effective but slow and complex, often requiring many iterations to produce a finished image.

The new framework collapses this multi-step process into a single step. It does so through a method known as distribution matching distillation (DMD), which trains a new, simpler model to emulate the behavior of more complex, pre-existing models. The approach preserves the quality of the generated images while making generation thirty times faster.

Tianwei Yin, the lead researcher and an MIT PhD student, emphasized the significance of this development. He explained that their method combines the principles of generative adversarial networks (GANs) with those of diffusion models, enabling the generation of visual content in a single step, as opposed to the hundreds of iterations required previously. This innovation could drastically reduce computational time while maintaining or even surpassing the visual quality of the images produced.

The potential applications of this single-step diffusion model are vast and varied. From enhancing design tools for faster content creation to advancing fields like drug discovery and 3D modeling, the efficiency and effectiveness of this technology could have far-reaching implications.

The DMD framework has two components. A regression loss stabilizes training by anchoring the mapping of the image space, and a distribution matching loss ensures that the probability of generating a given image matches how often that image occurs in the real world. Two diffusion models act as guides: they help the system distinguish real images from generated ones, which in turn makes it practical to train the fast one-step generator.
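To make the interplay of the two losses concrete, here is a minimal toy sketch in one dimension. It is not the authors' implementation: closed-form Gaussian scores stand in for the two guiding diffusion models, the generator is a hypothetical one-parameter shift `G(z) = z + theta`, and names such as `gaussian_score`, `train_toy_generator`, and `reg_weight` are assumptions made for illustration.

```python
import numpy as np

def gaussian_score(x, mean, std=1.0):
    """Score (gradient of the log-density) of N(mean, std^2) evaluated at x."""
    return -(x - mean) / std**2

def train_toy_generator(steps=200, lr=0.1, reg_weight=0.25, seed=0):
    """Fit a one-step toy generator G(z) = z + theta to a target N(3, 1).

    Assumption: analytic scores replace the two diffusion models
    ("real" and "fake") that guide training in the article's description.
    """
    rng = np.random.default_rng(seed)
    real_mean = 3.0  # hypothetical target distribution N(3, 1)
    theta = 0.0      # generator parameter; outputs follow N(theta, 1)
    for _ in range(steps):
        z = rng.standard_normal(256)
        x = z + theta  # one-step generation: a single forward pass
        # Distribution matching term: difference between the score of the
        # generated distribution and the score of the real distribution,
        # averaged over samples (dG/dtheta = 1 for this generator).
        dm_grad = np.mean(gaussian_score(x, theta) - gaussian_score(x, real_mean))
        # Regression term: squared error against paired outputs from a
        # hypothetical teacher that maps the same noise z to z + real_mean.
        y = z + real_mean
        reg_grad = np.mean(2.0 * (x - y))
        theta -= lr * (dm_grad + reg_weight * reg_grad)
    return theta

theta = train_toy_generator()
```

In this toy setting both terms pull `theta` toward the target mean. In a real system the "fake" score would come from a diffusion model trained alongside the generator rather than from a formula.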

The researchers also used pre-trained networks for the new “student” model, so that it could be adapted and fine-tuned quickly from the original models. This keeps training fast and efficient, and the resulting model produces high-quality images comparable to those generated by the more complex models.

In tests against conventional methods, the DMD framework performed well across various benchmarks. Notably, its image quality and diversity came very close to those of the original models, as indicated by its Fréchet inception distance (FID) scores, a metric on which lower values mean the generated images are statistically closer to the reference images. There is still room for improvement on more complex text-to-image tasks, but the results so far mark a substantial step forward in AI image generation.
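For readers unfamiliar with the metric, the Fréchet distance behind FID compares the mean and covariance of two sets of feature vectors. The sketch below shows that formula in NumPy; it is illustrative only. In the standard FID setup the features come from an Inception network, whereas the function names and inputs here are placeholders and no benchmark numbers are implied.

```python
import numpy as np

def feature_stats(features):
    """Mean vector and covariance matrix of an (n_samples, n_dims) array."""
    return features.mean(axis=0), np.cov(features, rowvar=False)

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between Gaussians N(mu1, sigma1) and N(mu2, sigma2).

    d^2 = ||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 * (sigma1 sigma2)^(1/2))
    """
    diff = mu1 - mu2
    # The trace of the matrix square root equals the sum of the square
    # roots of the eigenvalues of sigma1 @ sigma2, which are real and
    # non-negative for covariance matrices (clip guards against round-off).
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    trace_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * trace_sqrt)
```

Two identical feature sets give a distance of zero, and the value grows as the means or covariances drift apart.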

Beyond faster, high-quality image generation, the work opens up possibilities for real-time visual editing and content creation. With institutional support and a collaborative research effort behind it, the DMD framework is positioned to influence both AI research and digital art.

