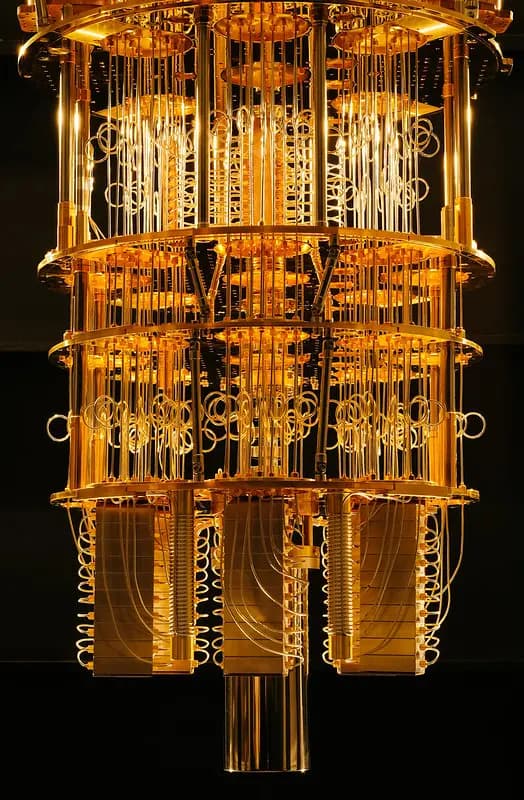

Deep-learning models are revolutionizing numerous fields, from healthcare diagnostics to financial forecasting. However, their immense computational demands necessitate the use of powerful cloud-based servers. This reliance on cloud computing introduces significant security vulnerabilities, especially when handling sensitive data like patient medical records.

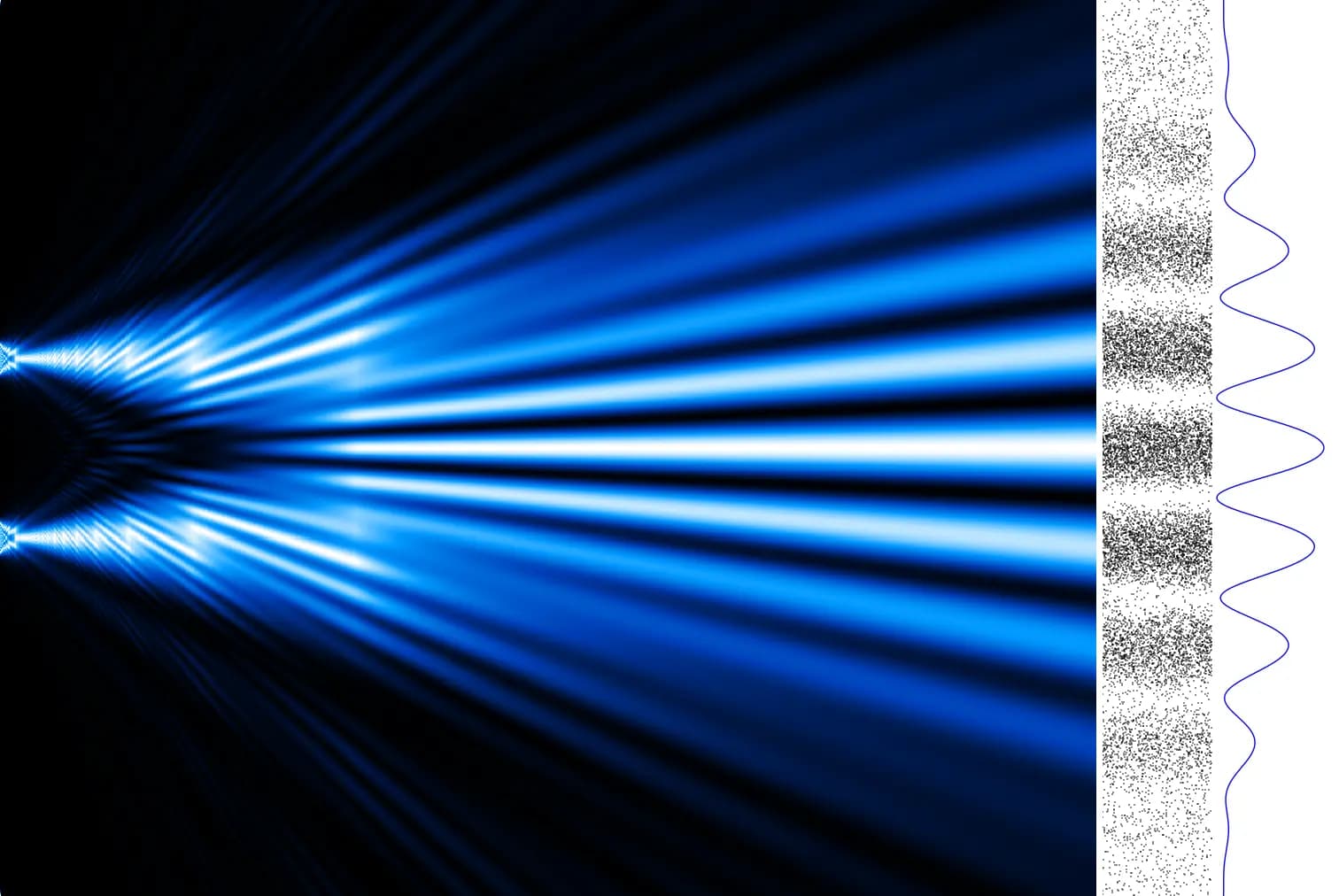

To address this challenge, MIT researchers have developed a security protocol that uses the quantum properties of light to protect data sent to and from cloud servers during deep-learning computations. The protocol encodes data into the laser light used in fiber-optic communication systems. It exploits fundamental principles of quantum mechanics that make it impossible for an attacker to copy or intercept the information without being detected.
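The article does not describe how this protocol detects an interception. To illustrate the general principle only, the sketch below substitutes the textbook BB84 intercept-resend scenario from quantum key distribution (QKD). It is not the MIT protocol. An eavesdropper who measures each photon in a randomly chosen basis unavoidably disturbs it, which raises the error rate on the sifted key from 0 to about 25 percent. The function names `measure` and `bb84_error_rate` are hypothetical. The model assumes ideal single photons and a noiseless channel.

```python
import random

# Toy model: ideal qubits, no channel loss or noise. This is textbook BB84,
# not the MIT deep-learning protocol.


def measure(bit: int, prep_basis: int, meas_basis: int, rng: random.Random) -> int:
    """Ideal single-qubit measurement: measuring in the preparation basis
    returns the encoded bit; measuring in the conjugate basis gives a random bit."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)


def bb84_error_rate(n: int, eavesdrop: bool, seed: int = 0) -> float:
    """Simulate n BB84 rounds and return the error rate on the sifted key."""
    rng = random.Random(seed)
    errors = sifted = 0
    for _ in range(n):
        bit, a_basis = rng.randint(0, 1), rng.randint(0, 1)  # sender's bit and basis
        sent_bit, sent_basis = bit, a_basis
        if eavesdrop:
            # Intercept-resend: the eavesdropper measures in a random basis and
            # re-prepares the photon in that basis with whatever result she got.
            e_basis = rng.randint(0, 1)
            sent_bit = measure(bit, a_basis, e_basis, rng)
            sent_basis = e_basis
        b_basis = rng.randint(0, 1)  # receiver's randomly chosen basis
        b_bit = measure(sent_bit, sent_basis, b_basis, rng)
        if a_basis == b_basis:  # sifting: keep only rounds with matching bases
            sifted += 1
            errors += b_bit != bit
    return errors / sifted if sifted else 0.0


if __name__ == "__main__":
    print(f"no eavesdropper:  {bb84_error_rate(20000, eavesdrop=False):.3f}")
    print(f"intercept-resend: {bb84_error_rate(20000, eavesdrop=True):.3f}")  # close to 0.25
```

On an ideal channel, any nonzero error rate signals tampering. Real QKD systems must tolerate some channel noise, so they compare the measured error rate against a threshold instead.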

“Deep learning models like GPT-4 have unprecedented capabilities but require massive computational resources. Our protocol enables users to harness these powerful models without compromising the privacy of their data or the proprietary nature of the models themselves,” explains Kfir Sulimany, an MIT postdoc in the Research Laboratory of Electronics (RLE) and lead author of a paper on this security protocol.

The protocol establishes a two-way security channel for deep learning. A client, such as a hospital, can use a cloud-based deep learning model without revealing patient data. Simultaneously, the server, perhaps a company like OpenAI, can protect its proprietary model. “Both parties have something they want to hide,” adds Sri Krishna Vadlamani, a co-author of the paper and an MIT postdoc.

The protocol hinges on the no-cloning theorem, which states that an unknown quantum state cannot be perfectly copied. The server encodes the weights of a deep neural network into an optical field using laser light. The client then uses this encoded light to run the computation on its private data, so the server never accesses the data itself. Because the weights are carried by quantum states of light, the same principle prevents the client from copying the model's weights.
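The no-cloning property described above follows from the unitarity of quantum mechanics. The standard textbook argument is sketched below as an illustration; it is not taken from the paper. Suppose a single unitary $U$ could copy two arbitrary states $|\psi\rangle$ and $|\phi\rangle$ onto a blank register $|0\rangle$. Because $U$ preserves inner products, taking the inner product of the two copying equations gives:

```latex
\begin{aligned}
U\big(|\psi\rangle \otimes |0\rangle\big) &= |\psi\rangle \otimes |\psi\rangle,
\qquad
U\big(|\phi\rangle \otimes |0\rangle\big) = |\phi\rangle \otimes |\phi\rangle \\
\langle\psi|\phi\rangle \, \langle 0|0\rangle &= \langle\psi|\phi\rangle^{2}
\;\Longrightarrow\;
\langle\psi|\phi\rangle = \langle\psi|\phi\rangle^{2}
\;\Longrightarrow\;
\langle\psi|\phi\rangle \in \{0, 1\}
\end{aligned}
```

A copier can therefore work only for states that are identical or orthogonal. An unknown state drawn from a set of non-orthogonal states cannot be duplicated exactly.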

The researchers showed that the approach is practical. A deep neural network running under the protocol reached 96 percent accuracy while security was maintained. The protocol lets only an insignificant amount of information leak, too little for an adversary to recover the client's sensitive data or details of the proprietary model.

“You can be guaranteed that it is secure in both ways, from the client to the server and from the server to the client,” Sulimany emphasizes.

This advance could transform cloud-based deep learning, particularly in privacy-sensitive fields. In future work, the researchers aim to apply the protocol to federated learning, in which multiple parties collaboratively train a central deep-learning model. They also plan to explore quantum operations, which could further improve both accuracy and security.

Eleni Diamanti, a CNRS research director at Sorbonne University in Paris, who was not involved with this work, comments, “This work combines in a clever and intriguing way techniques drawing from fields that do not usually meet, in particular, deep learning and quantum key distribution. By using methods from the latter, it adds a security layer to the former, while also allowing for what appears to be a realistic implementation. This can be interesting for preserving privacy in distributed architectures. I am looking forward to seeing how the protocol behaves under experimental imperfections and its practical realization.”
