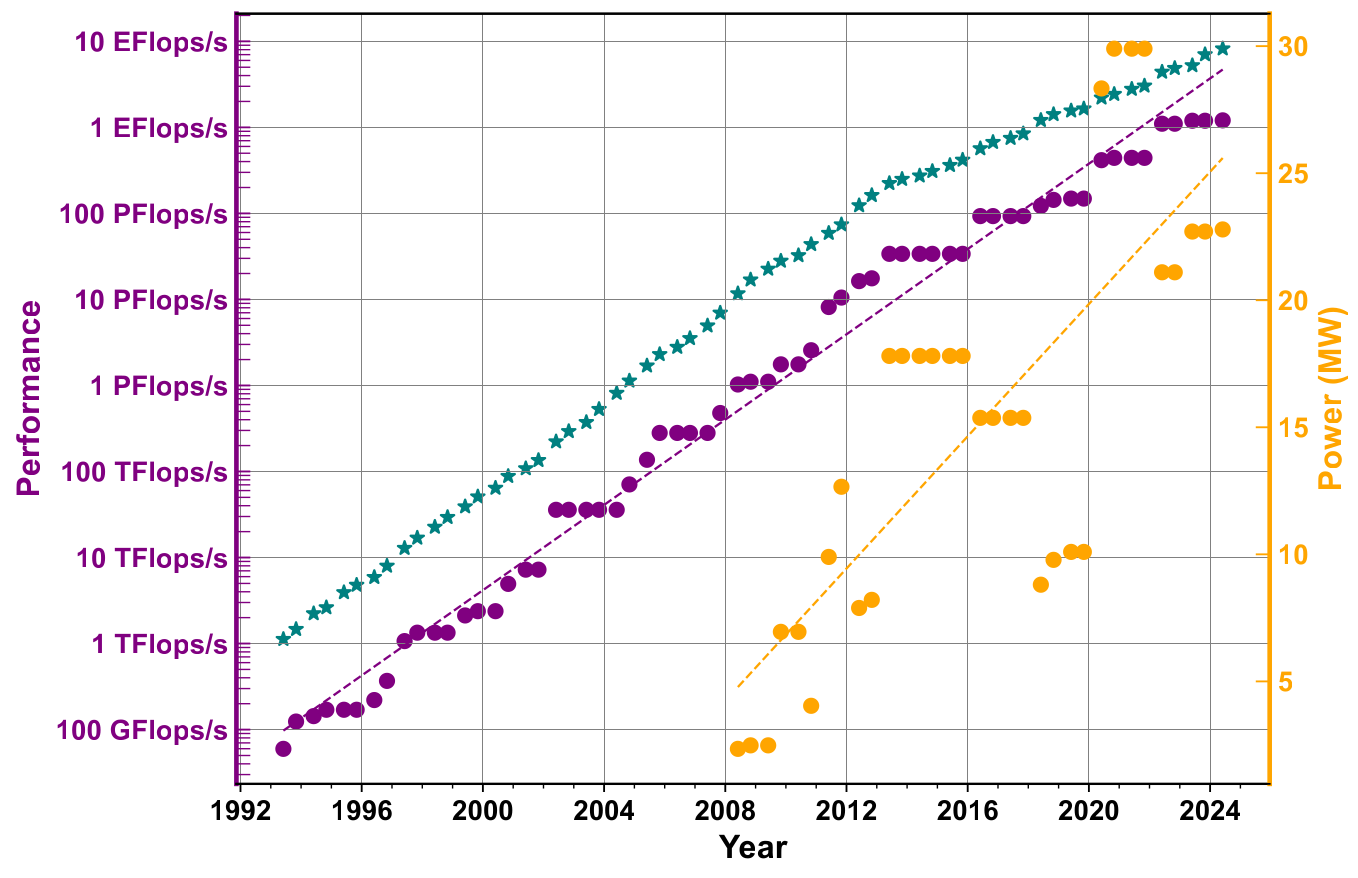

The move into the exascale era, in which computers can perform at least a quintillion (10¹⁸) floating-point operations per second, marks a thrilling transformation for quantum mechanical (QM) simulations. But this transition isn’t simply about raw computational horsepower. As researchers at the University of Twente in the Netherlands discuss in their recent Perspective in Nature Reviews Physics, the landscape of high-performance computing (HPC) is undergoing a profound shift, one that demands a total rethinking of how we develop and use scientific software.

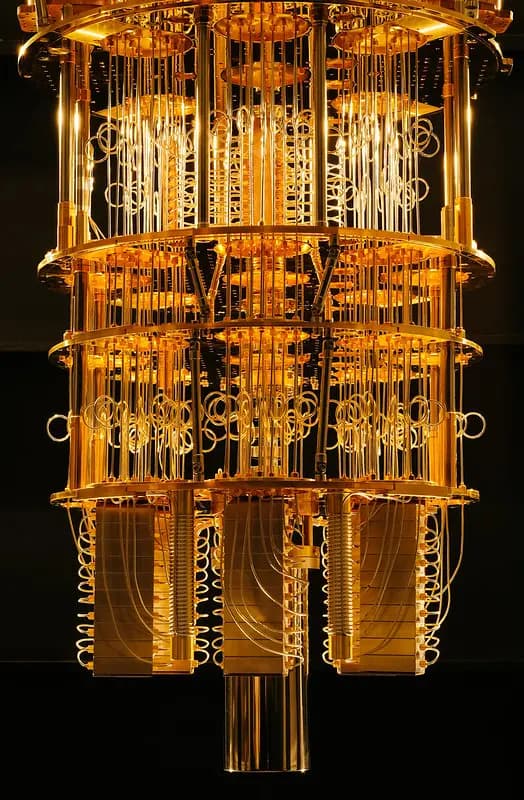

Traditionally, QM simulations thrived on the steady scaling of CPU cores and clock speeds. However, exascale computing is now defined by heterogeneous architectures, where CPUs are increasingly paired with GPUs. GPUs offer massive parallelism and speed, especially for dense matrix operations, but they also introduce serious complexities. Algorithms originally designed for CPUs often struggle to harness the true potential of these new systems.

This new terrain requires fresh strategies. QM software must adapt to exploit GPUs efficiently, which often means major rewrites and new programming models. Complicating matters further, the software ecosystem has fragmented: developers must now choose among vendor-specific programming models such as CUDA (NVIDIA) and HIP (AMD), the open SYCL standard, or community-driven abstraction layers such as Kokkos and Alpaka.

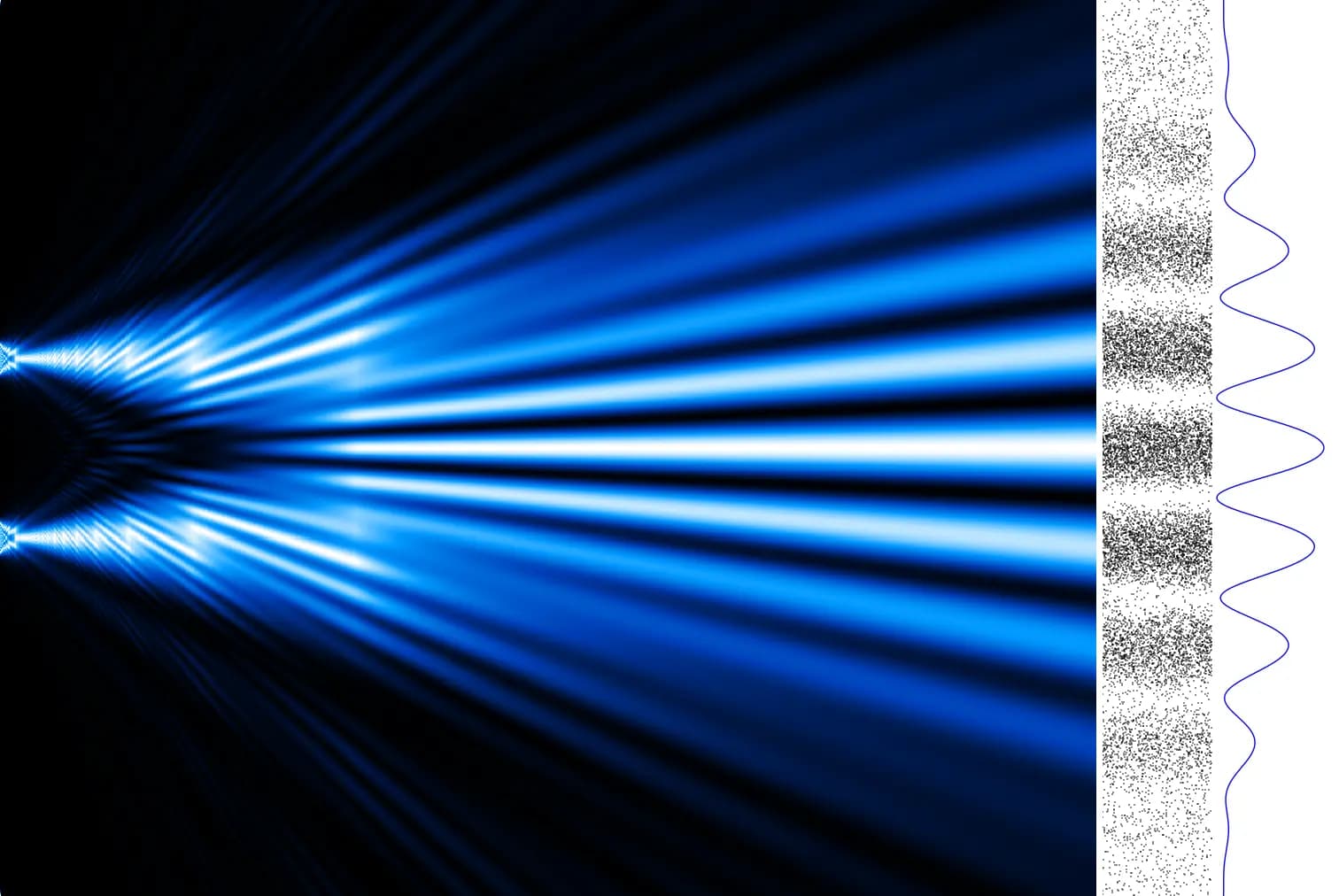

Quantum mechanical methods, whether based on density functional theory (DFT), coupled-cluster theory, or quantum Monte Carlo techniques, are feeling the pressure. Mean-field methods such as Hartree-Fock and DFT are comparatively GPU-friendly, whereas post-Hartree-Fock methods, which rely on large and complex tensor operations, are considerably harder to scale. Meanwhile, new approaches, such as mixed-precision algorithms and machine-learning-augmented simulations, offer promising paths forward by sharply cutting computational costs while preserving the accuracy that applications require.
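To make the mixed-precision idea concrete, here is one widely used instance, chosen purely for illustration (the Perspective is not claimed to prescribe it): iterative refinement for a linear system Ax = b. The expensive O(n³) LU factorization is done once in single precision, and cheap O(n²) residual corrections computed in double precision then recover double-precision accuracy for well-conditioned matrices. This is a minimal sketch under stated assumptions: it is pure Python and only emulates float32 by rounding through `struct`, the helper names (`to_f32`, `lu_factor_f32`, `lu_solve_f32`, `mixed_precision_solve`) are hypothetical, and pivoting is omitted on the assumption of a diagonally dominant matrix. A production GPU code would call a tuned linear-algebra library instead.

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float (IEEE double) to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def lu_factor_f32(a: list[list[float]]) -> list[list[float]]:
    """Doolittle LU factorization with every intermediate rounded to float32.

    Assumption: no pivoting, so the matrix should be diagonally dominant.
    L (unit diagonal, stored below the diagonal) and U share one array.
    """
    n = len(a)
    lu = [[to_f32(v) for v in row] for row in a]
    for k in range(n):
        for i in range(k + 1, n):
            lu[i][k] = to_f32(lu[i][k] / lu[k][k])  # multiplier l_ik
            for j in range(k + 1, n):
                lu[i][j] = to_f32(lu[i][j] - to_f32(lu[i][k] * lu[k][j]))
    return lu


def lu_solve_f32(lu: list[list[float]], b: list[float]) -> list[float]:
    """Solve LU x = b using the float32 factors, rounding each step to float32."""
    n = len(lu)
    y = [0.0] * n
    for i in range(n):  # forward substitution with unit-diagonal L
        s = to_f32(b[i])
        for j in range(i):
            s = to_f32(s - to_f32(lu[i][j] * y[j]))
        y[i] = s
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution with U
        s = y[i]
        for j in range(i + 1, n):
            s = to_f32(s - to_f32(lu[i][j] * x[j]))
        x[i] = to_f32(s / lu[i][i])
    return x


def residual_f64(a: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """r = b - A x, computed in full double precision."""
    return [b[i] - sum(a[i][j] * x[j] for j in range(len(x))) for i in range(len(b))]


def mixed_precision_solve(a: list[list[float]], b: list[float], iters: int = 5) -> list[float]:
    lu = lu_factor_f32(a)          # costly O(n^3) step, done once in low precision
    x = lu_solve_f32(lu, b)        # initial low-precision solution
    for _ in range(iters):
        r = residual_f64(a, x, b)  # cheap O(n^2) residual in double precision
        d = lu_solve_f32(lu, r)    # correction reuses the low-precision factors
        x = [xi + di for xi, di in zip(x, d)]  # update accumulated in double
    return x


# Usage: a small, well-conditioned tridiagonal system with a known solution.
a = [[4.0, 1.0, 0.0], [1.0, 4.0, 1.0], [0.0, 1.0, 4.0]]
x_true = [0.1, 0.2, 0.3]
b = [sum(a[i][j] * x_true[j] for j in range(3)) for i in range(3)]

x32 = lu_solve_f32(lu_factor_f32(a), b)  # single precision only
x_mp = mixed_precision_solve(a, b)       # float32 factors + float64 refinement
print("float32 error:", max(abs(u - v) for u, v in zip(x32, x_true)))
print("refined error:", max(abs(u - v) for u, v in zip(x_mp, x_true)))
```

The plain float32 solve is limited to single-precision accuracy, while a few refinement steps bring the error down to the double-precision level, even though the O(n³) work was done entirely in the cheaper format. The same trade-off is what makes low-precision GPU arithmetic attractive for dense linear algebra.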

Ultimately, succeeding in the exascale era requires a collaborative effort. Researchers must not only optimize algorithms for GPU-rich environments but also embrace standardized, modular software libraries and energy-efficient computing. It’s a call to rethink legacy codes, foster stronger ties between academia and industry, and embed AI more deeply into scientific workflows.

As exascale systems open unprecedented opportunities for material discovery, molecular design, and fundamental science, one thing is clear: the future belongs to those ready to rethink, rebuild, and innovate across both hardware and software frontiers.

The article is available on the publisher’s website: https://www.nature.com/articles/s42254-025-00823-7.
