Introduction

Artificial Intelligence (AI) models have been at the forefront of technological advancements, revolutionizing industries from healthcare to finance. Developing and deploying these models demands significant computational resources, particularly during the training and inference phases. Data from a range of AI systems shows exponential growth in the computation required for both training and inference. This growth has profound implications for energy usage and hardware costs, which are critical factors in the sustainability and accessibility of AI technologies.

The Growth of AI Model Training and Inference Computation

The number of parameters in AI models has skyrocketed over the past decade. Early neural networks had relatively few parameters. Contemporary models, particularly those in natural language processing (NLP) such as GPT-3 (175 billion parameters) and its successors, have billions of parameters. This increase in model size is driven by the pursuit of greater accuracy and functionality, as larger models can capture more complex patterns in data.

Training Computation Requirements

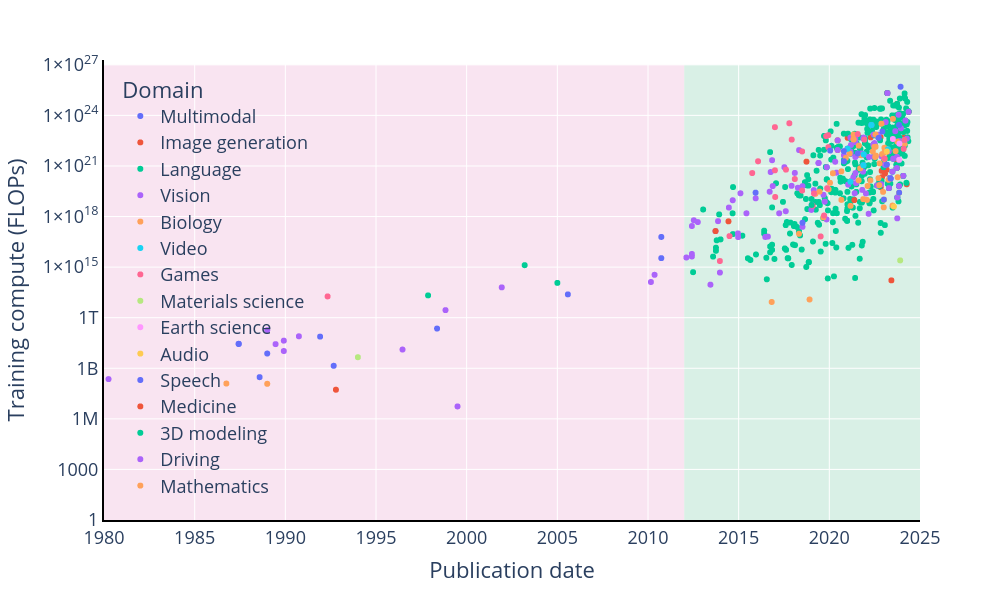

Training these massive models requires an enormous amount of computational power. The data shows a clear trend: as the number of parameters increases, so does the training compute, measured as the total number of floating-point operations (FLOP) performed during training. This relationship is evident in the accompanying scatter plot, which depicts the steep rise in training compute required by AI models across various domains.
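To make the link between parameter count and training compute concrete, the sketch below uses a common rule of thumb for dense transformer models: training compute is roughly 6 × parameters × training tokens. This approximation is not taken from the dataset analyzed here, and the function name is illustrative. The GPT-3 figures (175 billion parameters, about 300 billion training tokens) are the publicly reported ones.

```python
def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute for a dense transformer.

    Assumes the rule of thumb C = 6 * N * D (forward plus backward pass),
    which is an approximation and not a measurement.
    """
    return 6.0 * n_params * n_tokens


# GPT-3 scale: 175e9 parameters trained on roughly 300e9 tokens.
flop = estimate_training_flop(175e9, 300e9)
print(f"{flop:.2e} FLOP")  # prints 3.15e+23 FLOP
```

Note that the result is a total operation count (FLOP), not a rate (FLOP per second).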

Implications for Energy Usage

The exponential growth in training compute has significant energy implications. Training large models consumes substantial electricity, contributing to the overall carbon footprint of AI development. This energy consumption not only impacts the environment but also increases operational costs for organizations developing these models. As AI becomes more pervasive, addressing the energy efficiency of training processes is crucial for sustainable development.
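A back-of-the-envelope calculation shows how a compute budget translates into electricity. The sketch below is a simplified model under stated assumptions: every input in the example call (device throughput, utilization, power draw, and data-center overhead, or PUE) is an illustrative placeholder, not a measurement from any real training run, and the function name is hypothetical.

```python
def estimate_energy_kwh(
    total_flop: float,
    peak_flop_per_s: float,
    utilization: float,
    watts_per_device: float,
    pue: float,
) -> float:
    """Rough electricity estimate for a training run, in kilowatt-hours."""
    # Device-seconds needed at the achieved (not peak) throughput.
    device_seconds = total_flop / (peak_flop_per_s * utilization)
    # Energy in joules, scaled by data-center overhead (PUE).
    joules = device_seconds * watts_per_device * pue
    return joules / 3.6e6  # 1 kWh = 3.6e6 joules


# All values below are assumptions chosen only for illustration.
energy = estimate_energy_kwh(
    total_flop=3.15e23,      # hypothetical training budget
    peak_flop_per_s=3e14,    # assumed accelerator peak throughput
    utilization=0.4,         # assumed fraction of peak actually achieved
    watts_per_device=400.0,  # assumed power draw per accelerator
    pue=1.1,                 # assumed data-center overhead factor
)
print(f"{energy:,.0f} kWh")
```

The estimate is linear in every input, so halving utilization doubles the energy, which is one reason efficiency work on the training stack matters as much as the hardware itself.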

The Role of Inference in AI Deployment

While training is computationally intensive, inference—the phase where the model is used to make predictions—also requires considerable resources, especially for large models. The latency and compute requirements for real-time inference in applications like autonomous driving or real-time language translation necessitate powerful hardware and efficient algorithms.
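As a rough companion estimate, a forward pass through a dense transformer costs about 2 FLOP per parameter per token. The sketch below assumes that rule of thumb, ignores the extra cost of attention over long contexts as well as batching and caching effects, and uses hypothetical function names. Combined with the 6 × parameters × tokens rule for training, it also gives the number of served tokens at which cumulative inference compute matches the training compute.

```python
def inference_flop(n_params: float, n_tokens: float) -> float:
    """Approximate inference compute, assuming about 2 FLOP per parameter per token."""
    return 2.0 * n_params * n_tokens


def breakeven_tokens(n_training_tokens: float) -> float:
    """Tokens served before inference compute equals training compute.

    From 6 * N * D = 2 * N * T it follows that T = 3 * D,
    independent of the parameter count N under these assumptions.
    """
    return 3.0 * n_training_tokens


per_token = inference_flop(175e9, 1)
print(f"{per_token:.2e} FLOP per token")
print(f"{breakeven_tokens(300e9):.2e} tokens to match training compute")
```

For a widely deployed model, the break-even point can be reached quickly, so inference may end up dominating lifetime compute.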

Hardware Costs and Accessibility

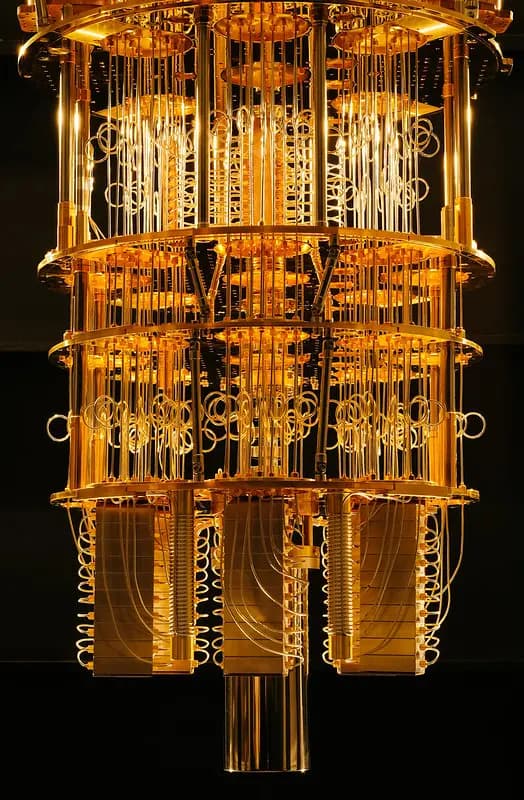

The need for advanced hardware to support AI model training and inference has driven up costs. Specialized hardware, such as Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs), is essential for handling the massively parallel computations required by modern AI models. However, these components are expensive, creating barriers to entry for smaller organizations and researchers.

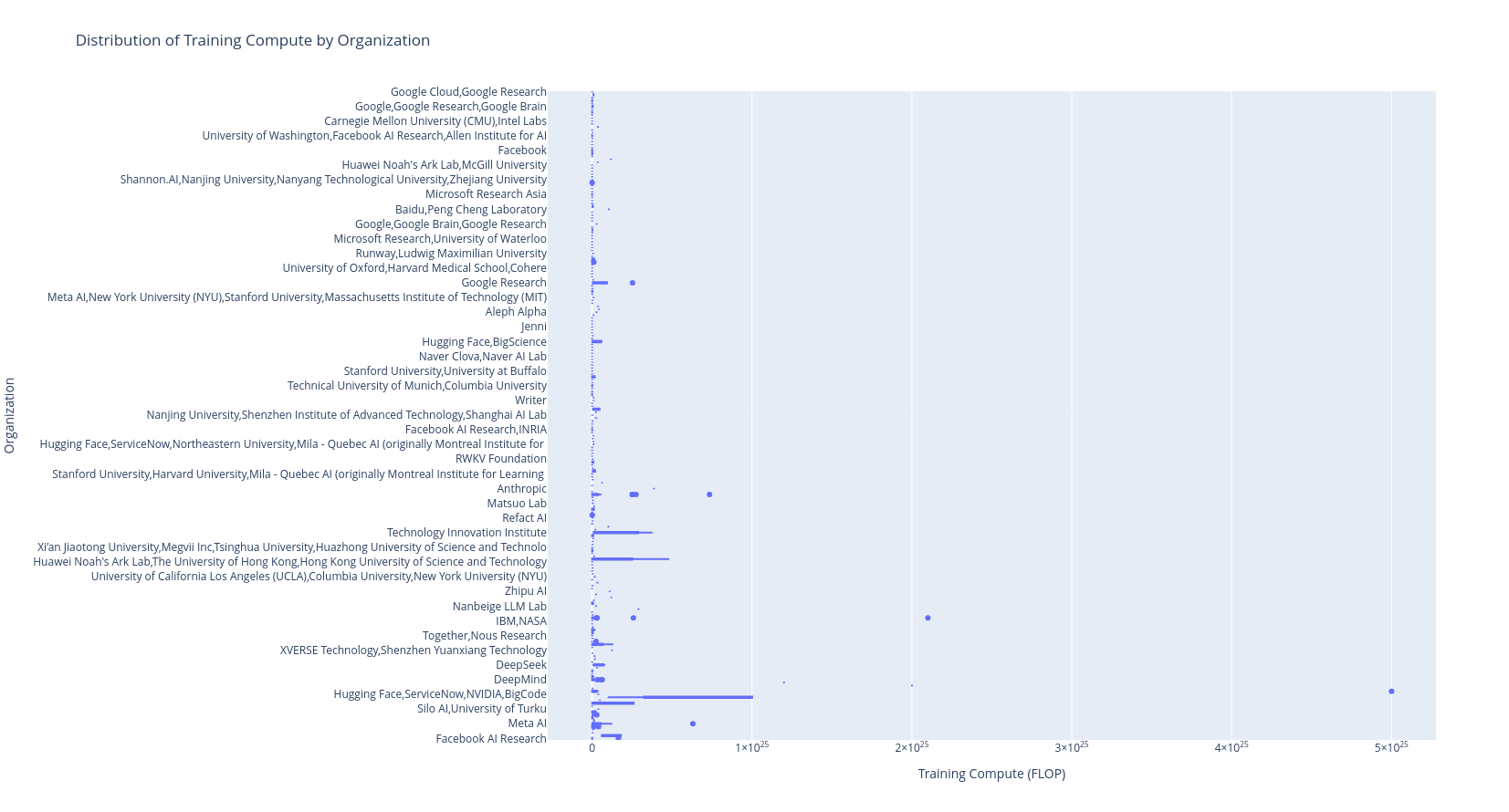

Distribution of Training Compute by Organization

A box plot of training computation by organization reveals the disparities in computational resources available to different entities. Larger organizations with substantial funding can afford the high costs associated with state-of-the-art AI development, while smaller players may struggle to keep up.
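The statistics behind such a box plot can be sketched with the standard library alone. The rows below are invented placeholder values (not taken from the dataset), the organization names are hypothetical, and compute is summarized on a log10 scale because the values span many orders of magnitude.

```python
import math
import statistics
from collections import defaultdict

# Placeholder rows: (organization, training compute in FLOP). Invented for illustration.
rows = [
    ("Org A", 1e21), ("Org A", 1e22), ("Org A", 1e23), ("Org A", 1e24),
    ("Org B", 1e18), ("Org B", 1e19), ("Org B", 1e20),
]


def box_stats(values):
    """Five-number summary of log10(compute), the quantities a box plot draws."""
    logs = sorted(math.log10(v) for v in values)
    q1, median, q3 = statistics.quantiles(logs, n=4, method="inclusive")
    return {"min": logs[0], "q1": q1, "median": median, "q3": q3, "max": logs[-1]}


# Group compute values by organization, then summarize each group.
by_org = defaultdict(list)
for org, flop in rows:
    by_org[org].append(flop)

summary = {org: box_stats(vals) for org, vals in by_org.items()}
for org, stats in summary.items():
    print(org, {k: round(v, 2) for k, v in stats.items()})
```

A gap of several units between two organizations' medians on this scale corresponds to a difference of several orders of magnitude in raw compute.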

Correlation Between Key Features

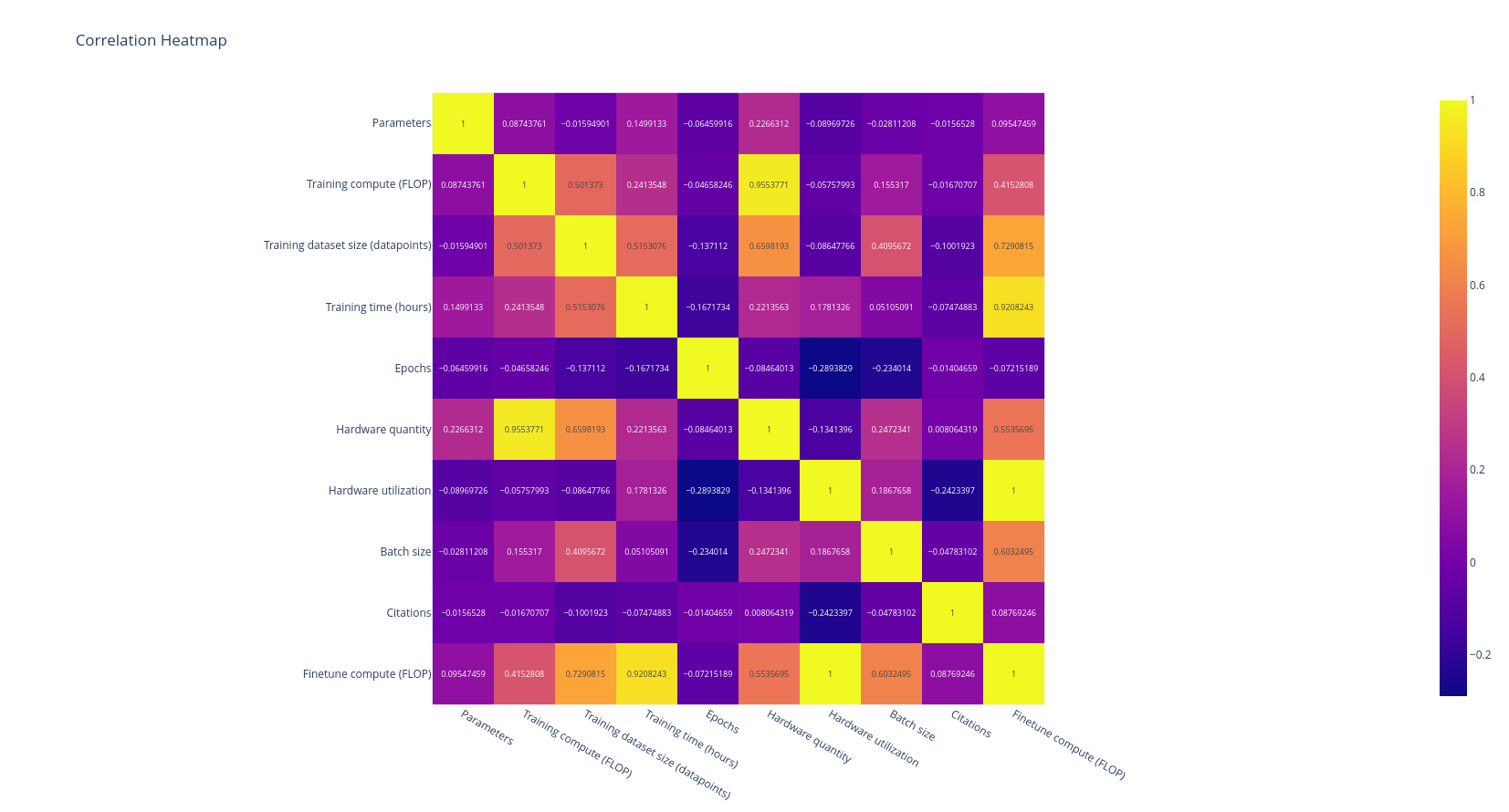

Analyzing the correlation between various numerical features in the dataset provides insights into the factors driving the growth of AI models. A correlation heatmap highlights the relationships between parameters, training compute, and other critical variables, offering a comprehensive view of the AI development landscape.
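Each cell of a correlation heatmap is a single pairwise coefficient. The sketch below computes one such value, the Pearson correlation between log-transformed parameter counts and training compute. The input lists are made-up placeholders rather than rows from the dataset, and taking logarithms first is an assumption made here because both quantities span many orders of magnitude.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Placeholder values, invented for illustration only.
parameters = [1e6, 1e8, 1e9, 1e11]
training_flop = [1e15, 1e18, 1e21, 1e23]

log_params = [math.log10(p) for p in parameters]
log_flop = [math.log10(c) for c in training_flop]

r = pearson(log_params, log_flop)
print(f"correlation of log10 values: {r:.3f}")
```

Repeating this for every pair of numerical columns yields the full matrix that the heatmap visualizes.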

Conclusion

The exponential growth in AI model parameters and the corresponding increase in computational requirements for training and inference have profound implications for energy usage and hardware costs. As AI continues to evolve, addressing these challenges is essential to ensure sustainable and equitable development. Organizations must invest in energy-efficient technologies and consider the environmental impact of their AI endeavors. Moreover, democratizing access to advanced computational resources can help smaller entities contribute to and benefit from the AI revolution.

By understanding these trends and their implications, we can work towards a future where AI advancements are both innovative and sustainable, benefiting society as a whole.

The cleaned AI model data can be downloaded from this link.
